Proposed Framework

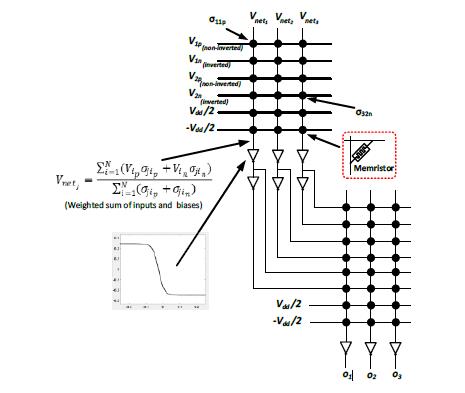

The fully connected (FC) junction memristive circuit, shown in Fig. 1, offers better performance, lower power consumption, higher energy efficiency, and smaller area than other memristive neuromorphic circuits, such as the op-amp-based circuit proposed in [8]. In this circuit, the dot-product operation is performed in the memristive crossbar, while the inverters implement the neuron’s nonlinear operation, i.e., the activation function. The circuit has differential inputs and uses two memristors per weight, which allows it to implement both negative (𝑛) and positive (𝑝) weights.
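To make the differential weight scheme concrete, the following is a minimal behavioral sketch, not the circuit model used in this work. It assumes an idealized crossbar in which each column node drives a high-impedance inverter input, so the node voltage is the conductance-weighted average of the differential inputs. The function names, the conductance values, and the `tanh` model of the inverter transfer curve (with hypothetical `vdd` and `gain` parameters) are all illustrative assumptions.

```python
import numpy as np

def crossbar_column_voltages(v_in, g_pos, g_neg):
    """Idealized column-node voltages of a differential memristive crossbar.

    v_in  : (n_inputs,) input voltages; the complementary rail carries -v_in.
    g_pos : (n_inputs, n_outputs) conductances tied to the +v_in rail (siemens).
    g_neg : (n_inputs, n_outputs) conductances tied to the -v_in rail (siemens).

    Assumption: no current flows into the inverter input, so each column
    node settles at the conductance-weighted average of its inputs.
    """
    v_in = np.asarray(v_in, dtype=float)
    g_pos = np.asarray(g_pos, dtype=float)
    g_neg = np.asarray(g_neg, dtype=float)
    # Effective signed weight of each input is proportional to g_pos - g_neg.
    numerator = v_in @ (g_pos - g_neg)
    denominator = (g_pos + g_neg).sum(axis=0)
    return numerator / denominator

def inverter_activation(v, vdd=1.0, gain=10.0):
    """Hypothetical smooth model of an inverter transfer curve around 0 V."""
    return -(vdd / 2.0) * np.tanh(gain * np.asarray(v, dtype=float))

# Two inputs, one output neuron; conductance values are illustrative only.
v_in = np.array([0.1, -0.2])
g_pos = np.array([[2e-6], [1e-6]])
g_neg = np.array([[1e-6], [2e-6]])

v_node = crossbar_column_voltages(v_in, g_pos, g_neg)
v_out = inverter_activation(v_node)
```

In this sketch a weight is positive when `g_pos > g_neg` and negative otherwise, which is how a pair of non-negative conductances can represent a signed weight.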

Experimental Results

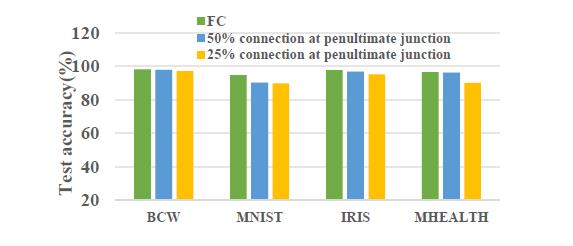

We used the IRIS, BCW, MNIST, and MHEALTH datasets to measure performance. For each dataset, 80% of the data are randomly chosen for training, and the remaining 20% are used to measure test accuracy. Table I details the network structure used for each classification dataset, and Fig. 7 shows the accuracy at different percentages of network connections (in other words, at different levels of sparsity). The results in Fig. 7 show that, with connectivity as low as 25% at the penultimate junction (junction 𝐽−1 for a network with 𝐽 junctions), the network’s classification accuracy is hardly degraded. Each network was trained for 10,000 epochs with a mini-batch size of 1.
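The data split and the sparsity setting can be illustrated with a short sketch. It is not the code used for the experiments: the helper names, the seeds, and the junction size (16 by 8) are hypothetical, and selecting the retained connections uniformly at random is an assumption, since the selection rule is not specified here.

```python
import numpy as np

def train_test_split_indices(n_samples, train_fraction=0.8, seed=0):
    """Randomly partition sample indices into training and test sets."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return perm[:n_train], perm[n_train:]

def random_connectivity_mask(n_in, n_out, density, seed=0):
    """Boolean mask that keeps a fixed fraction of a junction's connections.

    Assumption: the kept connections are drawn uniformly at random.
    """
    rng = np.random.default_rng(seed)
    n_total = n_in * n_out
    n_keep = int(round(density * n_total))
    flat = np.zeros(n_total, dtype=bool)
    flat[rng.choice(n_total, size=n_keep, replace=False)] = True
    return flat.reshape(n_in, n_out)

# IRIS has 150 samples, so an 80/20 split yields 120 training and 30 test samples.
train_idx, test_idx = train_test_split_indices(150)

# Hypothetical penultimate junction with 16 inputs and 8 outputs at 25% connectivity.
mask = random_connectivity_mask(16, 8, density=0.25)
weights = np.random.default_rng(1).normal(size=(16, 8))
sparse_weights = weights * mask  # pruned connections contribute nothing
```

Applying the same mask after every weight update would keep the pruned connections at zero throughout training; with a mini-batch size of 1, that update happens once per training sample.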