DCNN Architecture Overview

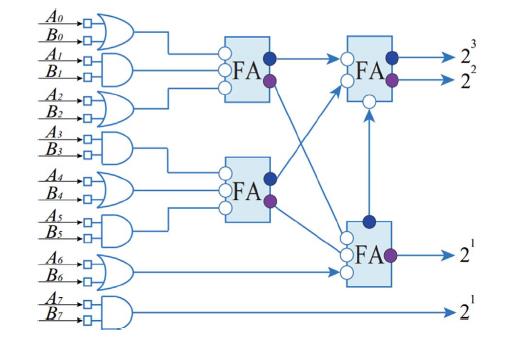

A general DCNN is composed of a stack of convolutional layers, pooling layers, and fully connected layers. Each convolutional layer is usually followed by a pooling layer, and together they extract features from the raw inputs or from the preceding feature maps. A fully connected layer aggregates the high-level features, and softmax regression is applied to derive the final output. The basic component of a DCNN is the Feature Extraction Block (FEB), which performs the inner product, pooling, and activation operations.
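The FEB data flow described above can be illustrated with a minimal floating-point sketch. This is not the hardware design evaluated later; it assumes max pooling over the inner products of one pooling window and a ReLU activation, and the names `feb`, `fully_connected`, and `softmax` are hypothetical. Because max pooling commutes with any monotonically non-decreasing activation, pooling first and then activating once per window gives the same result as activating every inner product before pooling.

```python
import math
from typing import List


def feb(receptive_fields: List[List[float]], weights: List[float], bias: float = 0.0) -> float:
    """Hypothetical Feature Extraction Block: inner product -> max pooling -> ReLU.

    Each entry of `receptive_fields` holds the inputs seen by the shared kernel at one
    position inside a single pooling window (e.g. 4 positions for 2x2 pooling).
    """
    # 1) Inner product of every receptive field with the shared kernel weights.
    products = [sum(x * w for x, w in zip(field, weights)) + bias for field in receptive_fields]
    # 2) Max pooling over the inner products of this pooling window.
    pooled = max(products)
    # 3) Activation (ReLU assumed here), applied once to the pooled value.
    return max(0.0, pooled)


def fully_connected(features: List[float], weight_rows: List[List[float]], biases: List[float]) -> List[float]:
    """Aggregate the extracted features into one logit per output class."""
    return [sum(f * w for f, w in zip(features, row)) + b for row, b in zip(weight_rows, biases)]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # One 2x2 pooling window: four receptive fields with two inputs each (toy values).
    fields = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [-2.0, 1.0]]
    # Two feature maps, i.e. two kernels applied to the same window.
    features = [feb(fields, [0.5, 0.25]), feb(fields, [-1.0, -1.0])]
    logits = fully_connected(features, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    probs = softmax(logits)
    print([round(p, 2) for p in probs])  # prints [0.62, 0.38]
```

In a real DCNN the FEB outputs of many windows and channels form the next feature map; the toy values here only trace one window through the pipeline.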

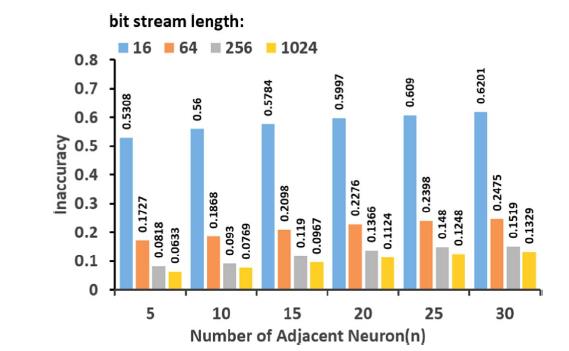

Experimental Results

In this section, we present (i) a performance evaluation of the SC FEBs, (ii) a performance evaluation of the proposed SC-LRN design, and (iii) the impact of SC-LRN and dropout on overall DCNN performance. The FEBs and DCNNs are implemented in Verilog and synthesized with Synopsys Design Compiler using the 45 nm Nangate library.